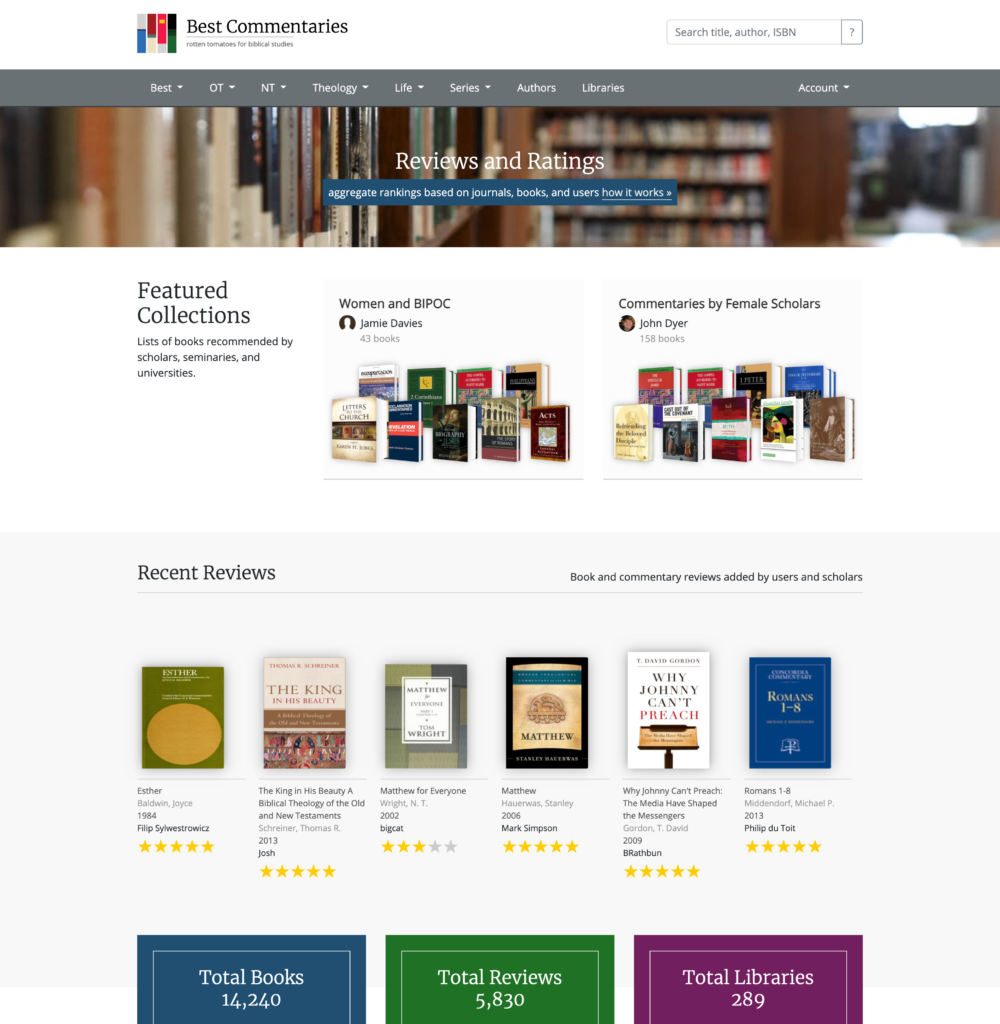

I’m happy to announce that today, I’m releasing the first major update to bestcommentaries.com in almost 10 years.

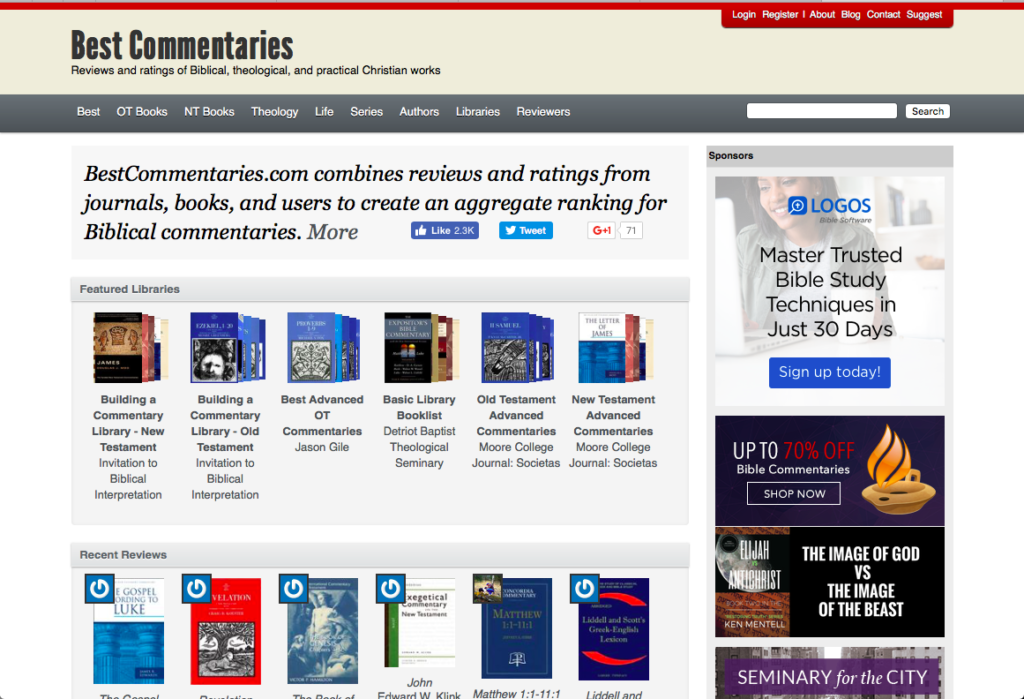

I originally created bestcommentaries.com in 2008, as a project for one of my final seminary classes. Then in 2011, I did a fairly major technical and feature update, and that website has remained in place for a decade.

The site originally had just a few hundred books and visitors, but it has grown to almost 15,000 books and 6,000 reviews over a variety of categories, and it has enough traffic that I think it’s giving a good number of people a place to start when looking for commentaries.

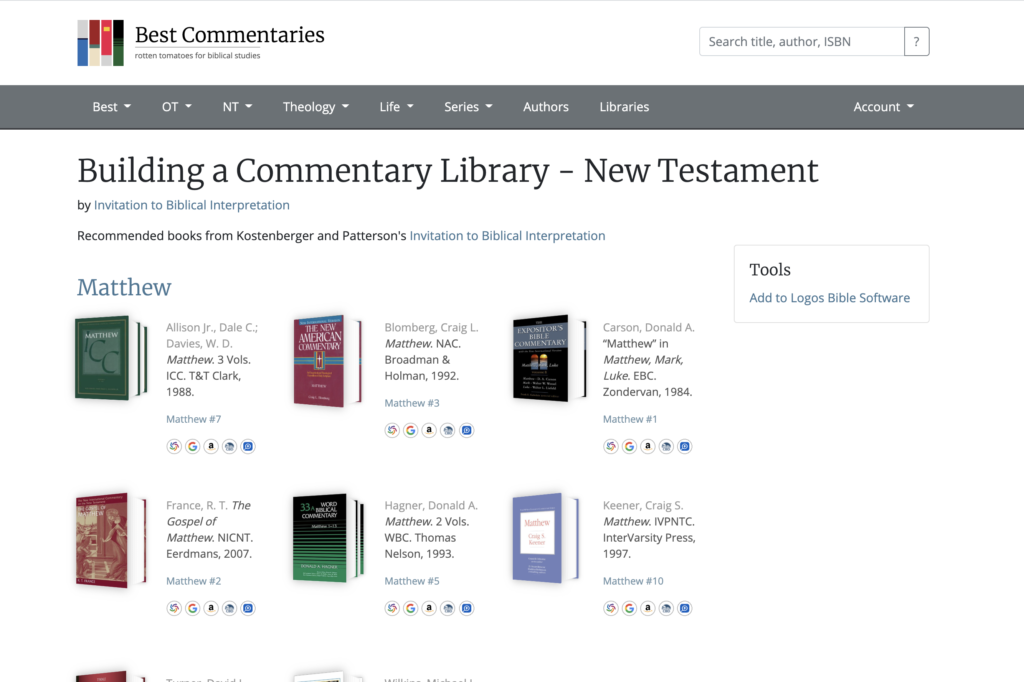

New Look

As a lover of books, I’ve always wanted bestcommentaries.com to hint at the physicality of the books, their size, heft, and feel. The original version of the site had some 3D effects using Flash (remember Flash?), and this new version has a few visual cues that call back to that.

If you poke around, you’ll find some little animations that attempt to represent the size and shape of each book. I can’t replicate that library smell, but thanks to the page counts and dimensions of the books (including two-volume sets), you’ll be able to see the square shape of a Hermeneia volume or the familiar thickness of a Word Commentary.

New Features

The main features of the site haven’t changed—users can still create accounts, add reviews, and create lists of their own libraries or recommendations. These reviews and lists form the data for the recommendation algorithm, and each review makes the site stronger. I also continue to scour the web and books for publicly available reviews that I hope increase the reliability of the ratings.

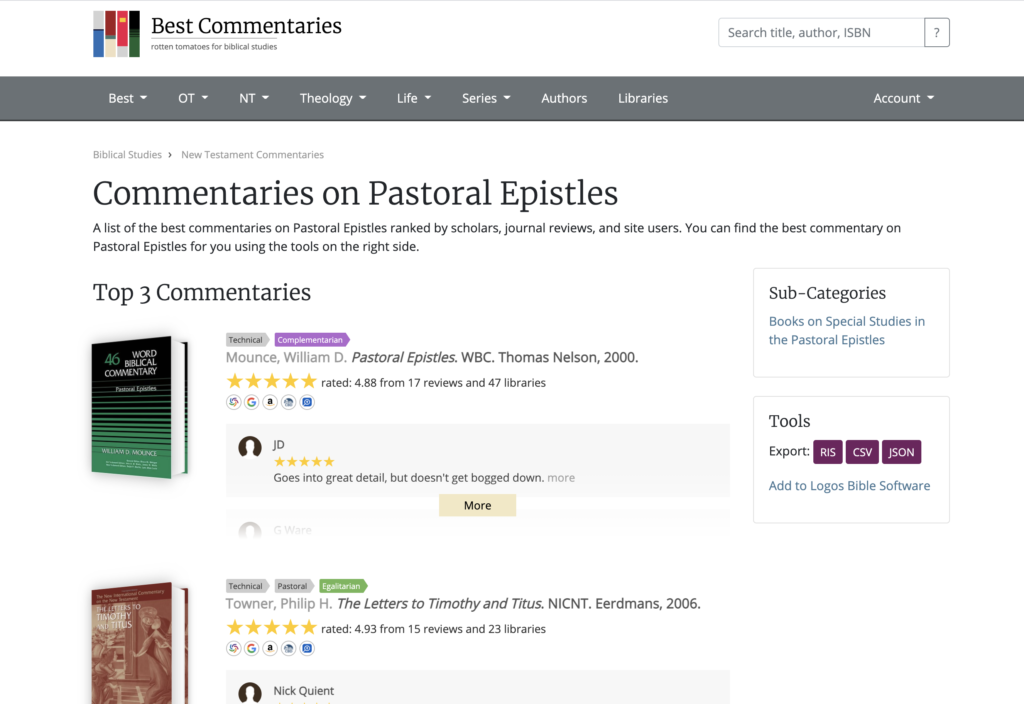

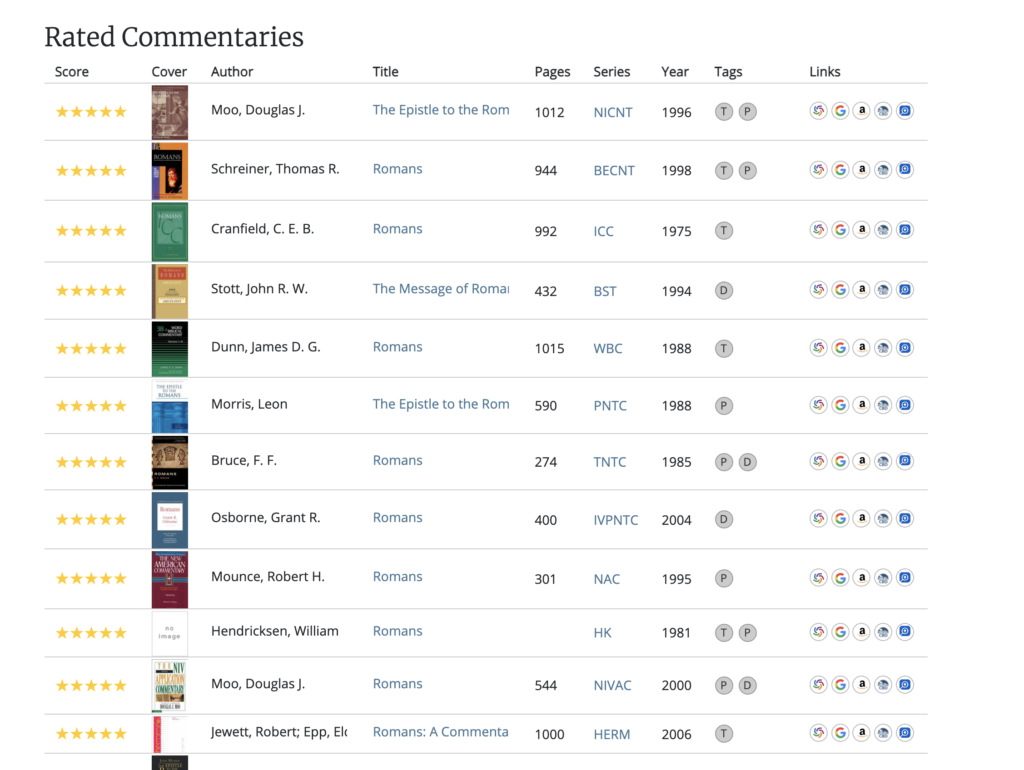

This updated version also has lots of little added details that I hope make it more usable. Since there really isn’t such a thing as “the best commentary on book X” (I love Princeton’s statement, “Recommending commentaries and monographs on biblical books is something like recommending restaurants in a large city. Possibilities are nearly endless and depend in large measure on one’s taste and interests.”), there are filters to help you find (T)echnical, (P)astoral, and (D)evotional commentaries. The book list pages (e.g., Best Commentaries on Romans) now include snippets from the last several reviews of the highest-rated commentaries to give an immediate sense of why those are good places to start.

The main list of all commentaries now includes the page count and links to other databases, Amazon, CBD, and Bible software apps. You can also download the lists into Zotero, EndNote, and other bibliographic engines using the Export tools on the right.

In the past, these pages included an aggregate score that ranged from 60 to 100, where the highest-rated book on the site had a 100 and the others fell somewhere in between. I’ve removed this in favor of just showing the star rating, which takes the average of the reviews and weights it by other recommendations, like the number of collections the book is in. Hopefully, this will make more sense, but it gets at a much deeper issue.

The Algorithm Problem

I hope these changes modernize the site and make it more usable, but they don’t address one of the core struggles I’m still working on: the resonant power of cultural and algorithmic bias. In fact, I think this site is a good example of how data is never just data, and why there’s always more going on under the surface (examples: Amazon’s biased hiring algorithm, problems with criminal behavior predictions, Google’s exploration of gender in text).

When I first started the project, I thought that if I just collected a bunch of lists, I could generate a simple objective aggregate ranking. If there are three lists, and list 1 recommends A and B, list 2 recommends B and C, and list 3 recommends B and D, then the data would show that B was the best overall pick.
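That naive counting idea can be sketched in a few lines of Python. This is only an illustration of the original assumption, not the site’s actual code; the `rank_by_mentions` function and the sample lists are made up for the example.

```python
from collections import Counter


def rank_by_mentions(recommendation_lists):
    """Return (title, count) pairs, most frequently recommended first."""
    counts = Counter()
    for picks in recommendation_lists:
        # Use a set so a title repeated within one list only counts once.
        counts.update(set(picks))
    # Sort by count (descending), then by title so ties have a stable order.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# The three hypothetical lists from the paragraph above.
lists = [["A", "B"], ["B", "C"], ["B", "D"]]
ranking = rank_by_mentions(lists)
print(ranking)  # [('B', 3), ('A', 1), ('C', 1), ('D', 1)]
```

Every list counts equally here, and nothing in the code asks who wrote the lists or when.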

What I didn’t realize at the time was that there were all kinds of cultural and technological assumptions behind that idea. It didn’t occur to me at first that most of the lists were produced by a largely homogenous group—evangelical writers and scholars. Having now studied the behaviors of American evangelicals for almost a decade, it makes sense that my tribe would be the ones who generate such lists. It can be understood as a kind of gatekeeping or safekeeping, protecting the folk from reading the wrong things. But unlike the often combative, defensive evangelical leaders who make the news, most evangelical scholars recommend using a mix of both “critical” and “conservative” commentaries. However, even this labelling and categorizing is not a neutral, objective way of looking at scholarship, and is itself built on a set of assumptions, however helpful they are.

There is also a more subtle issue that introduces a bias stemming from a complex combination of theology, data, and time. Some of the more popular commentary recommendation lists started being published in the 1970s (e.g., D.A. Carson’s NT list in 1976, based on Thiselton’s work) and more came through the 1990s (e.g., Longman’s OT list in 1991, Glynn’s comprehensive list in 1994). As new scholarship comes out, some of these books have received updates, but they also tend to be weighted toward commentaries that were around when they first came out. Even with the updates (Carson’s and Longman’s latest were in 2013), as these recommendation lists are passed around evangelical websites and books, those early recommendations tend to be self-reinforcing. The more times a book gets recommended, the more likely it is to get recommended again (note, for example, how many highly recommended a plagiarized book on Ephesians).

Although newer commentaries are not necessarily better than older ones, the inertia of older, more frequently rated commentaries means newer works have a hard time cracking the top. It’s also important to note that when those lists were first published, biblical scholarship (especially conservative scholarship) tended to be dominated by white men, so the recommendations are weighted in that direction. This history paired with the social algorithm of recommendations creates a systemic bias against female and minority authors. An example of this comes in commentaries on Mark, where William Lane’s excellent work from 1972 sits at #2, while several commentaries from female authors with more recent scholarship (Collins, 2007; Beavis, 2011) haven’t moved up the rankings as quickly as male authors writing at the same time (Stein, 2008).

What I am attempting to explain are two interrelated issues. First is the data itself. For the site to make more diverse (and better) recommendations, it needs more books and more reviews of those books. If a bunch of people created accounts and all rated a group of commentaries, those would move up or down pretty quickly (like the review-bombing that happened at Rotten Tomatoes). Second is the algorithm and how it weights that data. Or more precisely, how I weigh that data. (To a certain extent, this could be called Artificial Intelligence (AI), but the code is simple enough that it’s still human readable/blameable.) Should newer books and more recent reviews receive more weight? Should historically marginalized voices receive a multiplier? Is there data that should not be counted because it functions as noise/reverberants?

To help alter this trajectory, I’ve been looking at both data-driven and algorithmically driven ways to highlight a wider range of scholarship, starting with the data itself. For example, I’ve added some newer recommendation lists like Jamie Smith’s NT commentaries by women and BIPOC scholars, Nijay Gupta’s recommendations (now in a fantastic book), Princeton Seminary’s wide-ranging list (including important monographs), and Covenant Seminary’s list of Non-Western Commentaries (which I’d encourage all libraries to adopt and expand). I’ve also been maintaining a list of all the commentaries written by female scholars (see womenbiblescholars.com or Kristen Padilla’s site), and have several more sources lined up (which can take a lot of time).

On the user interface side, one way I’ve attempted to solve the issue of newer commentaries is to create a “New and Notable” list, which shows commentaries written in the last 5 years that have a rating of 4 or higher. For the book of Ephesians, this surfaces Lynn Cohick’s excellent work in the NICNT (replacing Bruce’s 1984 version), and Willie Jennings shows up under Commentaries on Acts. It also shows visitors Goldingay under Genesis, Schreiner under Revelation, and so on.
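As a rough sketch, a “New and Notable” filter along these lines might look like the following in Python. The `Book` class, the field names, the 0 to 5 rating scale, and the sort order are all assumptions for illustration, not the site’s real data model.

```python
from dataclasses import dataclass


@dataclass
class Book:
    title: str
    year: int      # publication year
    rating: float  # average star rating, assumed to be on a 0-5 scale


def new_and_notable(books, current_year, max_age_years=5, min_rating=4.0):
    """Keep books published within max_age_years that meet the rating floor."""
    cutoff = current_year - max_age_years
    recent = [b for b in books if b.year >= cutoff and b.rating >= min_rating]
    # Showing the highest-rated recent books first is an assumed ordering.
    return sorted(recent, key=lambda b: b.rating, reverse=True)


# Made-up sample data.
sample = [
    Book("Older classic", 1984, 4.8),
    Book("Recent, well reviewed", 2020, 4.5),
    Book("Recent, mixed reviews", 2019, 3.2),
]
picks = new_and_notable(sample, current_year=2021)
print([b.title for b in picks])  # ['Recent, well reviewed']
```

The older classic has the highest rating in the sample but is left out of this list, so a well-reviewed newer work gets some visibility without touching the main ranking.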

As I work on broadening the dataset, I’m also considering ways to tweak the ranking algorithm to take this data into account in a meaningful way. One of the most often repeated ethical commands in the Hebrew scriptures is against “unjust scales” (The Law, Lev 19:35-36; the Prophets, Ezek 45:10; Hos 12:7; Mic 6:11; Amos 8:4-8; and the Writings, Prov 11:1; 16:11; 20:10; 20:23) and “differing weights.” While a sword can kill an individual, biblical ethics is also concerned with tools and devices that can affect entire groups of people, and this includes algorithms.

I’m also exploring ways to make the algorithm as transparent as possible. The “About” page has always had the basic math listed, but I’d also like to add an explanation to each book page making it very clear how the ranking and score were calculated. These ideas are still a work in progress, and I welcome input and criticism.

Further Exploration

For those interested in looking more deeply into the ethics and issues, there are two recent books I’d recommend. The first is Kearns and Roth’s The Ethical Algorithm: The Science of Socially Aware Algorithm Design, which explores game theory and a host of issues related to algorithm design. I also appreciate Heidi Campbell’s Digital Creatives and the Rethinking of Religious Authority, which explores how religious people draw on Shirky’s concept of “algorithmic authority” (not creating algorithms per se, but understanding how Google and Facebook work) to wield power for their ministries and themselves (I gave a 10-minute overview of Campbell’s ideas).

Please let me know what you think of the site and if you have additional resources, recommendations, or feedback. And as always – go leave a review! https://www.bestcommentaries.com